This dependence upon the root account to perform all actions requiring privilege was recognized to be somewhat dangerous in that it was all or nothing and not suited to compartmentalization of roles. Furthermore, it increased the risk of vulnerabilities within a setuid application which may only require root privileges for a very small fraction of its activity such as opening a system file or binding to a privileged port.

This risk was well understood within the open systems community. As a result, IEEE Std. 1003.1e (aka POSIX.1e or POSIX.6), a major effort started in 1995, set out to develop a standardized set of security interfaces for conforming systems, including access control lists (ACLs), audit, separation of privilege (capabilities), mandatory access control (MAC) and information labels.

The work was terminated by IEEE's RevCon in 1998 at draft 17 of the document due to lack of consensus (mostly because of conflicting existing practice). While the formal standards effort failed, much of the draft standard has since made its way into the Linux kernel, including capabilities, which this post explores.

First, what do we mean by Linux capabilities? Basically, they are an extended version of the capabilities model described in the draft POSIX.1e standard. Readers familiar with VMS, or with versions of Unix which include a Trusted Computing Base (TCB), will recognize them as being somewhat analogous to privileges. These capabilities partition the set of root privileges into a set of distinct logical privileges which may be granted or assigned to processes, users, filesystems and more. As an aside, the term capability originated in a 1966 paper by Jack Dennis and Earl Van Horn (CACM vol 9, #3, pp 143-155, March 1966). Capabilities can be implemented in many ways, including via hardware tags, cryptography, within a programming language (e.g. Java) or using protected address space. For an introduction to capability-based mechanisms go here. Linux uses protected address space and extended file attributes to implement capabilities.

A capability flag is an attribute of a capability. There are three capability flags, named permitted (p), effective (e) and inheritable (i), associated with each of the capabilities which, by the way, are documented in the <linux/capability.h> header.

A capability set consists of a 64-bit bitmap (prior to libcap 2.03 a bitmap was 32 bits wide). A process has a capability state consisting of three capability sets, i.e. inheritable (I), permitted (P) and effective (E), with each capability flag implemented as a bit in each of the three bitmaps.
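To make the bitmap idea concrete, here is a minimal Python sketch that converts between capability names and a capability set bitmap. The bit numbers are the ones the kernel header assigns to these four capabilities; the table is deliberately incomplete, and the helper names `to_bitmap` and `from_bitmap` are made up for illustration and are not part of libcap.

```python
# Bit positions of a few capabilities, as numbered in the kernel header.
CAP_BITS = {
    "cap_chown": 0,
    "cap_net_bind_service": 10,
    "cap_net_raw": 13,
    "cap_sys_time": 25,
}

def to_bitmap(names):
    """Build a capability set bitmap from a list of capability names."""
    bitmap = 0
    for name in names:
        bitmap |= 1 << CAP_BITS[name]
    return bitmap

def from_bitmap(bitmap):
    """List the known capability names whose bit is set in the bitmap."""
    return sorted(n for n, bit in CAP_BITS.items() if bitmap & (1 << bit))

net_raw_only = to_bitmap(["cap_net_raw"])
print(hex(net_raw_only))                                         # 0x2000
print(from_bitmap(net_raw_only | to_bitmap(["cap_sys_time"])))
```

A process capability state is then simply three such integers, one each for I, P and E.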

Whenever a process attempts a privileged operation, the kernel checks that the appropriate bit in the effective set is set. For example, when a process tries to set the system clock, the kernel first checks that the CAP_SYS_TIME bit is set in the process's effective set.

The permitted set indicates what capabilities a process can use. A process can have capabilities set in the permitted set that are not in the effective set. This indicates that the process has temporarily disabled those capabilities. A process may set a bit in its effective set only if the same bit is set in its permitted set. The distinction between permitted and effective exists so that a process can bracket operations that need privilege.
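The bracketing idea is easier to see in code. What follows is a toy model in Python, not a real kernel or libcap interface: the class `CapState`, its methods and the `set_clock` stand-in are all hypothetical names I have invented for the sketch. It only illustrates the two rules above, namely that privileged operations consult the effective set and that an effective bit can be raised only when it is permitted.

```python
CAP_SYS_TIME = 1 << 25  # bit number taken from the kernel header

class CapState:
    """Toy model of a process capability state (not a real kernel API)."""

    def __init__(self, permitted=0, effective=0, inheritable=0):
        self.permitted = permitted
        self.effective = effective & permitted  # effective is a subset of permitted
        self.inheritable = inheritable

    def raise_cap(self, cap):
        # A bit may be enabled only if the permitted set contains it.
        if not self.permitted & cap:
            raise PermissionError("capability not in permitted set")
        self.effective |= cap

    def lower_cap(self, cap):
        # Disabling an effective bit is always allowed.
        self.effective &= ~cap

    def capable(self, cap):
        # Mirrors the kernel check: only the effective set is consulted.
        return bool(self.effective & cap)

def set_clock(state):
    """Stand-in for a privileged operation guarded by CAP_SYS_TIME."""
    if not state.capable(CAP_SYS_TIME):
        raise PermissionError("Operation not permitted")
    return "clock set"

proc = CapState(permitted=CAP_SYS_TIME)
proc.raise_cap(CAP_SYS_TIME)   # enable the privilege just before it is needed
result = set_clock(proc)
proc.lower_cap(CAP_SYS_TIME)   # and disable it again straight afterwards
print(result, proc.capable(CAP_SYS_TIME))
```

Between the `raise_cap` and `lower_cap` calls the process is privileged; everywhere else a bug in the program cannot touch the clock, even though the capability is still sitting in the permitted set.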

The inheritable capabilities are the capabilities of the current process that should be inherited by a program executed by the current process. The permitted set of a process is masked against the inheritable set during exec, while child processes and threads are given an exact copy of the capabilities of the parent process.

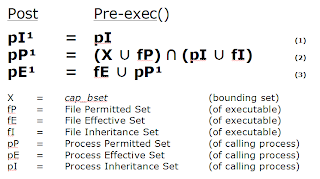

Rather than going into a detailed explaination of forced sets and capability calculations, I refer you to Chris Friedhoff's excellent explanation. However, I have summarized the rules in the following diagram.
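The exec-time rules can also be written down as a few lines of code. This Python sketch follows the formulas given in the capabilities(7) man page, under some loud assumptions: it ignores the special treatment of root and of setuid programs, it ignores ambient capabilities (which arrived in later kernels), and the names `ProcCaps`, `FileCaps` and `caps_after_exec` are mine, not anything from the kernel or libcap.

```python
from collections import namedtuple

CAP_NET_RAW = 1 << 13          # bit number taken from the kernel header
ALL_CAPS = (1 << 64) - 1       # stand-in for a full bounding set

ProcCaps = namedtuple("ProcCaps", "permitted effective inheritable")
FileCaps = namedtuple("FileCaps", "permitted inheritable effective_bit")

def caps_after_exec(proc, file_caps, bounding=ALL_CAPS):
    """Compute the process capability sets after exec of a file."""
    # New permitted: inherited bits the file accepts, plus bits the
    # file forces, limited by the bounding set.
    permitted = (proc.inheritable & file_caps.inheritable) | (
        file_caps.permitted & bounding
    )
    # The legacy effective bit copies the whole permitted set to effective.
    effective = permitted if file_caps.effective_bit else 0
    # The inheritable set passes through exec unchanged.
    return ProcCaps(permitted, effective, proc.inheritable)

shell = ProcCaps(permitted=0, effective=0, inheritable=0)
ping_ep = FileCaps(permitted=CAP_NET_RAW, inheritable=0, effective_bit=True)

print(caps_after_exec(shell, ping_ep))
print(caps_after_exec(shell, ping_ep, bounding=ALL_CAPS & ~CAP_NET_RAW))
```

The first call models an unprivileged shell running a binary marked cap_net_raw=ep: the new process ends up with CAP_NET_RAW both permitted and effective. The second call removes CAP_NET_RAW from the bounding set, and the capability is no longer granted. One thing this toy model glosses over: when the file's effective bit is set and the process cannot obtain all of the file's permitted capabilities, the real kernel fails the exec with EPERM rather than running the program with fewer privileges.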

For our first example of modifying capabilities, consider the ping utility. Many people do not realise that it is a setuid executable on most GNU/Linux systems. It has to be, because it needs privilege to write the type of packets it uses to probe the network.

$ ls -al /bin/ping

-rwsr-xr-x 1 root root 41784 2008-09-26 02:02 /bin/ping

If you copy ping, it loses its setuid bit and fails to work

$ cp /bin/ping .

$ ls -al ping

-rwxr-xr-x 1 fpm fpm 41784 2009-05-29 20:26 ping

$ ./ping localhost

ping: icmp open socket: Operation not permitted

but works if you become root

# ./ping -c1 localhost

PING localhost.localdomain (127.0.0.1) 56(84) bytes of data.

64 bytes from localhost.localdomain (127.0.0.1): icmp_seq=1 ttl=64 time=0.026 ms

--- localhost.localdomain ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.026/0.026/0.026/0.000 ms

as root, add CAP_NET_RAW to the permitted set and set the legacy effective bit

# /usr/sbin/setcap cap_net_raw=ep ./ping

check what capabilities ping now has

# /usr/sbin/getcap ./ping

./ping = cap_net_raw+ep
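What setcap actually stores is a small binary value in the file's security.capability extended attribute. As a rough sketch, and assuming the revision 2 on-disk layout from the kernel header (a 32-bit magic word followed by two little-endian permitted/inheritable pairs), it can be decoded in Python like this. The function name `decode_file_caps` is my own, and the sample bytes are built by hand rather than read from a real file.

```python
import struct

# Constants as defined in the kernel header for the on-disk format.
VFS_CAP_REVISION_MASK = 0xFF000000
VFS_CAP_REVISION_2 = 0x02000000
VFS_CAP_FLAGS_EFFECTIVE = 0x000001

def decode_file_caps(blob):
    """Decode a revision 2 security.capability value (20 bytes)."""
    magic, p_lo, i_lo, p_hi, i_hi = struct.unpack("<5I", blob)
    if (magic & VFS_CAP_REVISION_MASK) != VFS_CAP_REVISION_2:
        raise ValueError("not a revision 2 capability attribute")
    return {
        "permitted": p_lo | (p_hi << 32),
        "inheritable": i_lo | (i_hi << 32),
        "effective_bit": bool(magic & VFS_CAP_FLAGS_EFFECTIVE),
    }

# Hand-built equivalent of cap_net_raw+ep. On a real system the bytes
# would come from os.getxattr("./ping", "security.capability").
sample = struct.pack(
    "<5I", VFS_CAP_REVISION_2 | VFS_CAP_FLAGS_EFFECTIVE, 1 << 13, 0, 0, 0
)
print(decode_file_caps(sample))
```

Note that there is no effective set on disk, only a single flag. That is why getcap reports the e as a "legacy effective bit" rather than as a third bitmap.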

and this time ping works without being setuid

$ ls -al ./ping

-rwxr-xr-x 1 fpm fpm 41784 2009-05-29 20:26 ./ping

$ ./ping -c1 localhost

PING localhost.localdomain (127.0.0.1) 56(84) bytes of data.

64 bytes from localhost.localdomain (127.0.0.1): icmp_seq=1 ttl=64 time=0.026 ms

--- localhost.localdomain ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.026/0.026/0.026/0.000 ms

The following example shows how to configure pam_cap to allow the user test to use ping

cat /etc/security/capability.conf

#

# /etc/security/capability.conf

# last edit FPM 05/29/2009

#

## user 'test' can use ping via inheritance

cap_net_raw test

## everyone else gets no inheritable capabilities

none *
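As far as I can tell, pam_cap reads this file from the top and uses the first line that names the user (or `*`). Here is a small Python sketch of that lookup, written under that assumption. It is not pam_cap's code: the function name `inheritable_for` is invented, and it only handles plain user names, comments and the `none` keyword.

```python
def inheritable_for(user, conf_text):
    """Return the capability list from the first line matching the user.

    Returns None when no line matches at all.
    """
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        caps, *users = line.split()
        if user in users or "*" in users:
            return [] if caps == "none" else caps.split(",")
    return None

CONF = """\
## user 'test' can use ping via inheritance
cap_net_raw test
## everyone else gets no inheritable capabilities
none *
"""

print(inheritable_for("test", CONF))   # ['cap_net_raw']
print(inheritable_for("fpm", CONF))    # []
```

Because the first match wins, the catch-all `none *` line has to come last; placed first, it would also strip the capability from test.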

next ensure that pam_cap.so is required by su

cat /etc/pam.d/su

#%PAM-1.0

auth sufficient pam_rootok.so

# Uncomment the following line to implicitly trust users in the "wheel" group.

#auth sufficient pam_wheel.so trust use_uid

# Uncomment the following line to require a user to be in the "wheel" group.

#auth required pam_wheel.so use_uid

# FPM added pam_cap.so 5/29/2009

auth required pam_cap.so debug

auth include system-auth

account sufficient pam_succeed_if.so uid = 0 use_uid quiet

account include system-auth

password include system-auth

session include system-auth

session optional pam_xauth.so

now set up the capabilities for the ping that we are going to use

no legacy effective bit (e), no enabled privilege

# /usr/sbin/setcap -r ./ping

# /usr/sbin/setcap cap_net_raw=p ./ping

# /usr/sbin/getcap ./ping

./ping = cap_net_raw+p

can I ping as fpm? no.

$ id -un

fpm

$ cd ~test

$ ./ping -q -c1 localhost

ping: icmp open socket: Operation not permitted

can I ping as test? yes.

$ su - test

Password:

$ id -un

test

$ ./ping -q -c1 localhost

PING localhost.localdomain (127.0.0.1) 56(84) bytes of data.

--- localhost.localdomain ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.024/0.024/0.024/0.000 ms

using the previous ping example which was given CAP_NET_RAW

$ id -nu

root

$ /usr/sbin/getcap ./ping

./ping = cap_net_raw+ep

now use capsh to drop CAP_NET_RAW and change to uid 500 (fpm)

the operation is no longer permitted

$ /usr/sbin/capsh --drop=cap_net_raw --uid=500 --

$ id -nu

fpm

$ ./ping -q -c1 localhost

bash: ./ping: Operation not permitted

permanently drop CAP_NET_RAW

$ /usr/sbin/setcap -r ./ping

and invoke ping using capsh --caps

$ capsh --caps="cap_net_raw-ep" -- -c "./ping -c1 -q localhost"

PING localhost.localdomain (127.0.0.1) 56(84) bytes of data.

--- localhost.localdomain ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.056/0.056/0.056/0.000 ms

use capsh to print out capabilities

$ id -un

fpm

$ /usr/sbin/capsh --print

Current: =

Bounding set =cap_chown,cap_dac_override,cap_dac_read_search,cap_fowner,cap_fsetid,cap_kill,

cap_setgid,cap_setuid,cap_setpcap,cap_linux_immutable,cap_net_bind_service,cap_net_broadcast,

cap_net_admin,cap_net_raw,cap_ipc_lock,cap_ipc_owner,cap_sys_module,cap_sys_rawio,

cap_sys_chroot,cap_sys_ptrace,cap_sys_pacct,cap_sys_admin,cap_sys_boot,cap_sys_nice,

cap_sys_resource,cap_sys_time,cap_sys_tty_config,cap_mknod,cap_lease,cap_audit_write,

cap_audit_control,cap_setfcap,cap_mac_override,cap_mac_admin

Securebits: 00/0x0

secure-noroot: no (unlocked)

secure-no-suid-fixup: no (unlocked)

secure-keep-caps: no (unlocked)

uid=500
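The same information is available without capsh, because the kernel exposes the bitmaps as hexadecimal strings in /proc/<pid>/status. Here is a minimal Python sketch that pulls them out. The function name `parse_cap_sets` is mine, and the sample text is made up to resemble an unprivileged process with the 34-capability bounding set listed above, so the test does not depend on the machine it runs on.

```python
def parse_cap_sets(status_text):
    """Extract the Cap* bitmaps from the text of /proc/<pid>/status."""
    sets = {}
    for line in status_text.splitlines():
        key, _, value = line.partition(":")
        if key in ("CapInh", "CapPrm", "CapEff", "CapBnd"):
            sets[key] = int(value.strip(), 16)
    return sets

# Made-up sample in the /proc/<pid>/status format.
SAMPLE = """\
Name:\tbash
CapInh:\t0000000000000000
CapPrm:\t0000000000000000
CapEff:\t0000000000000000
CapBnd:\t00000003ffffffff
"""

caps = parse_cap_sets(SAMPLE)
print(caps["CapEff"] == 0)                # True: nothing is enabled
print(bin(caps["CapBnd"]).count("1"))     # 34 capabilities in the bounding set
```

To inspect the running interpreter instead of the sample, pass it the real file: `parse_cap_sets(open("/proc/self/status").read())`.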

All the examples shown above work on Fedora 10, which uses libcap 2.10. If you wish to experiment on another GNU/Linux distribution, it must have a kernel >= 2.6.24 built with CONFIG_SECURITY_FILE_CAPABILITIES=y, a version of libcap >= 2.08 and a filesystem that supports extended attributes, e.g. ext3 or ext4. Note that CONFIG_SECURITY_CAPABILITIES=y should no longer be required.

Why aren't more people aware of, and using, capabilities? I believe that poor documentation is the primary reason. For example, Fedora 10 is missing the man pages for getpcaps, capsh and pam_cap, and the Fedora Security Guide does not even mention capabilities (or, for that matter, ACLs!). I cannot help thinking that capabilities are treated as the poor cousin to SELinux by the Fedora Project.

Well, this blog post is getting a bit long, so I am going to stop. I have barely scratched the surface of capabilities. After Fedora 11 ships, I will cover capabilities from a programming perspective. Meanwhile, if you want to keep current with what is happening with Linux capabilities, Andrew Morgan's Fully Capable is the site to bookmark.